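In some scenarios, Redis pipelining can raise a web service's QPS and reduce CPU consumption on the redis-server side. Two typical cases: a handler issues several Redis requests whose results do not depend on one another; or a pipeline replaces a Lua script that accesses keys by array index (KEYS[i]), which avoids the restrictions on script key access in a cluster environment. This post measures how pipelining affects performance on both the web-server side and the redis-server side.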
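To make the mechanism concrete: on the wire, a pipeline is just several commands written to the connection back to back before any reply is read. The following is a minimal sketch that encodes commands in RESP, the Redis wire protocol. The function names `encodeCommand` and `encodePipeline` are illustrative assumptions and do not come from any client library or from the test code in this post.

```go
package main

import (
	"strconv"
	"strings"
)

// encodeCommand serializes one Redis command as a RESP array of bulk strings:
// "*<argc>\r\n" followed by "$<len>\r\n<arg>\r\n" for each argument.
func encodeCommand(args ...string) string {
	var b strings.Builder
	b.WriteString("*" + strconv.Itoa(len(args)) + "\r\n")
	for _, a := range args {
		b.WriteString("$" + strconv.Itoa(len(a)) + "\r\n" + a + "\r\n")
	}
	return b.String()
}

// encodePipeline concatenates several encoded commands into one buffer.
// A client can send this buffer in a single write and then read
// len(cmds) replies, instead of waiting for a reply after every command.
func encodePipeline(cmds [][]string) string {
	var b strings.Builder
	for _, c := range cmds {
		b.WriteString(encodeCommand(c...))
	}
	return b.String()
}
```

For example, `encodePipeline([][]string{{"GET", "a"}, {"GET", "b"}})` yields both GET commands in one buffer, which is what lets the server read and answer them without a network round trip in between.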

Test environment and tools

- web-server: Mac, Golang with the gin framework

- redis-server: CentOS 6.5, Redis 3.2.12, standalone

- Load generation: wrk, go-hey

- CPU monitoring: sar, ksar

Test code

Multiple synchronous requests

    func Do1(c *gin.Context) {

Pipeline request

    func Do2(c *gin.Context) {
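As a rough illustration of how the two handlers differ, the sketch below models both access patterns against a hypothetical in-memory stand-in that counts round trips. It is not the code used in the test: `fakeRedis`, `fetchSequential`, and `fetchPipelined` are names made up for this example, and no real network I/O or Redis client is involved.

```go
package main

// fakeRedis is a hypothetical in-memory stand-in for a Redis connection.
// It counts round trips instead of performing real network I/O.
type fakeRedis struct {
	data       map[string]string
	roundTrips int
}

// Get models one synchronous GET: one request, one reply, one round trip.
func (f *fakeRedis) Get(key string) string {
	f.roundTrips++
	return f.data[key]
}

// PipelineGet models a pipelined batch: all GETs are sent together and all
// replies are read together, so the whole batch costs a single round trip.
func (f *fakeRedis) PipelineGet(keys []string) []string {
	f.roundTrips++
	values := make([]string, 0, len(keys))
	for _, k := range keys {
		values = append(values, f.data[k])
	}
	return values
}

// fetchSequential mirrors the "multiple synchronous requests" pattern:
// each key is fetched with its own request and reply.
func fetchSequential(r *fakeRedis, keys []string) []string {
	values := make([]string, 0, len(keys))
	for _, k := range keys {
		values = append(values, r.Get(k))
	}
	return values
}

// fetchPipelined mirrors the "pipeline request" pattern:
// all keys are fetched in one batch.
func fetchPipelined(r *fakeRedis, keys []string) []string {
	return r.PipelineGet(keys)
}
```

With 8 keys, `fetchSequential` costs 8 round trips in this model while `fetchPipelined` costs 1; both return the same values in the same order.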

Performance on the web-server side

Multiple synchronous requests

    wrk -c 30 -t 2 -d 10s 'http://127.0.0.1:8888/do1'

    Running 10s test @ http://127.0.0.1:8888/do1

Pipeline request

    wrk -c 30 -t 2 -d 10s 'http://127.0.0.1:8888/do2'

    Running 10s test @ http://127.0.0.1:8888/do2

Performance on the redis-server side

request_load

Multiple synchronous requests

    hey -c 30 -q 900 -z 40s -m GET http://127.0.0.1:8888/do1

    Summary:

Pipeline request

    hey -c 30 -q 100 -z 40s -m GET http://127.0.0.1:8888/do2

    Summary:

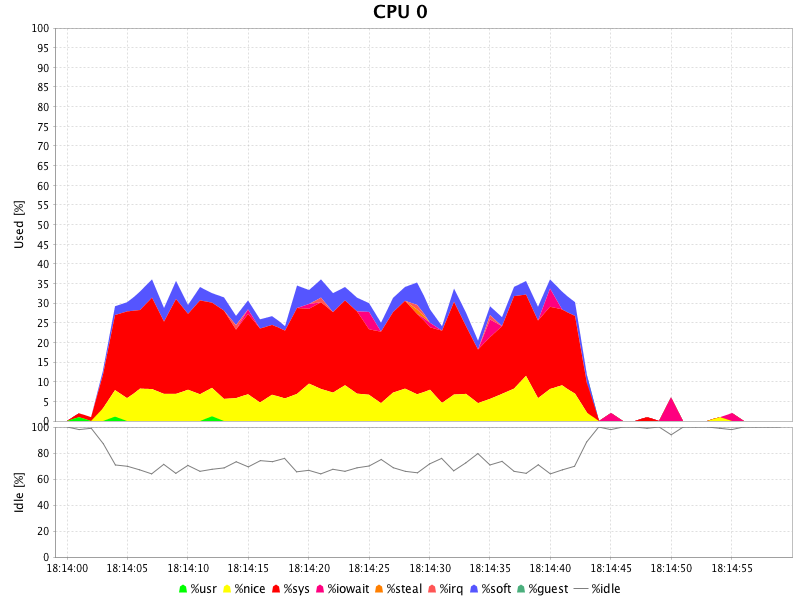

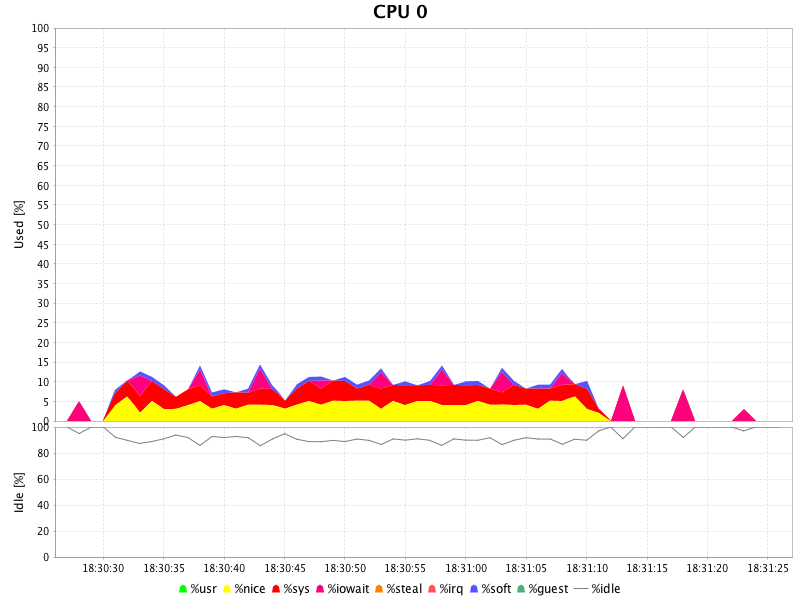

CPU load

CPU load curve

Conclusion

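In this test, each web request fetches data from redis-server 8 times. With pipelining, the web QPS is 3 times that of the non-pipelined version; when both versions are held to the same QPS, redis-server CPU usage with pipelining is roughly half that of the non-pipelined version. Pipelining is therefore one way to improve Redis's concurrent throughput. Keep the amount of data in a single pipeline under control, though: Redis executes commands on a single thread, so one oversized pipeline takes a long time to process and blocks other Redis requests in the meantime.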
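One simple way to bound the size of a single pipeline is to split the keys into fixed-size batches and send one pipeline per batch. The sketch below shows only the splitting step; `chunkKeys` is a hypothetical helper name, and a suitable batch size is an assumption that has to be tuned against the actual value sizes and latency targets.

```go
package main

// chunkKeys splits keys into consecutive batches of at most size elements,
// so that each batch can be sent as its own bounded pipeline.
// A non-positive size is treated as 1 to avoid an infinite loop.
func chunkKeys(keys []string, size int) [][]string {
	if size <= 0 {
		size = 1
	}
	var batches [][]string
	for start := 0; start < len(keys); start += size {
		end := start + size
		if end > len(keys) {
			end = len(keys)
		}
		batches = append(batches, keys[start:end])
	}
	return batches
}
```

Smaller batches cost more round trips but let other clients' commands be served between batches; larger batches do the opposite.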